Since the early work of Alan Turing and his mathematical formalisation of a general-purpose computing device (the Turing machine), a distinction has been drawn between a machine’s architecture and the set of programs it is able to execute. This distinction was already present in Charles Babbage’s Analytical Engine. Although that machine was never built, owing to the technological limitations of its time, Ada Byron (better known as Ada Lovelace) is credited with publishing the first program intended to be carried out by it. These two sides of the same coin are what we know today as hardware and software.

This distinction seems to have been lost in the design of the ENIAC, one of the first electronic computers, built in the United States during the Second World War. It was programmed by setting switches and physically wiring its units together with cables, which resulted in very complex programs closely tied to the hardware. The work of the so-called “ENIAC girls” as the programmers of this computer deserves special mention.

Precisely to reduce this programming effort, Kathleen Booth wrote the first assembly language at Birkbeck College, University of London, in 1950. The first programs to increase productivity by grouping jobs to be run in batches also date from that decade. Over time, these programs, which managed access to hardware services, would evolve into the modern operating system.

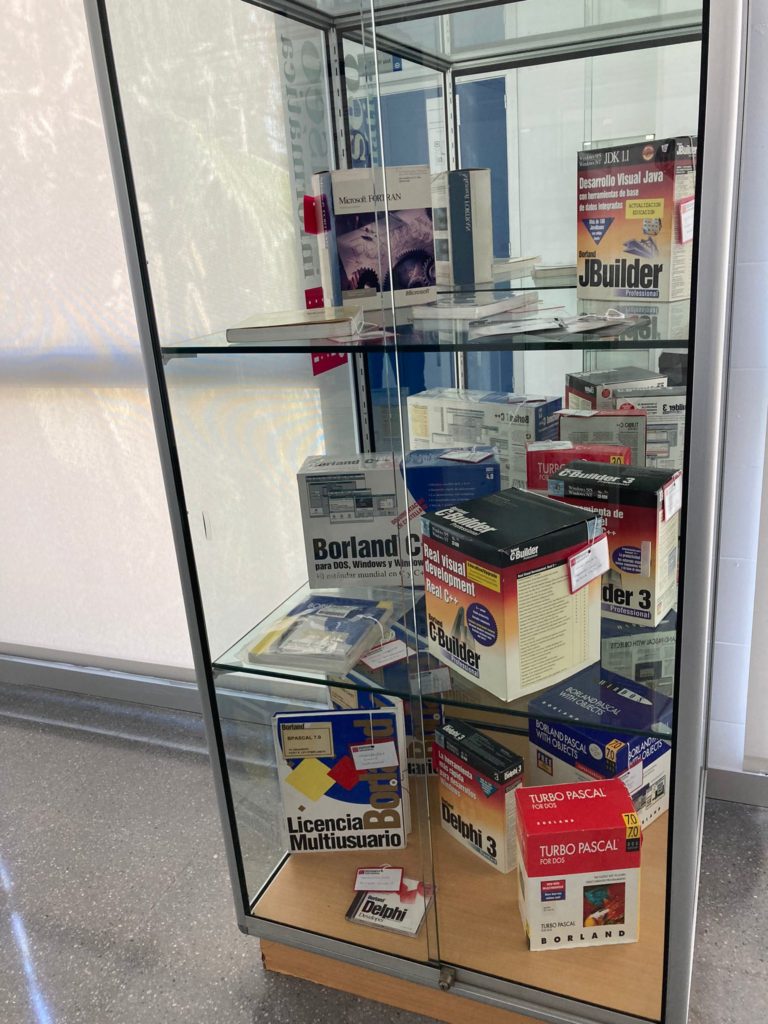

In the 1960s, IBM released OS/360, an operating system initially only 6 KB in size, which evolved from batch processing to multitasking and included a job control language. Earlier, at the end of the 1950s, John Backus had led the development of the compiler for FORTRAN, often regarded as the first modern high-level programming language. FORTRAN was created to reduce the effort of programming the IBM 704, and it is still used in scientific computing. UNIX, the basis of many of today’s operating systems, emerged in 1969, the year of the first crewed Moon landing.

In the 1970s, computing companies designed both hardware and applications and distributed them together. Because this practice forced users to buy or rent specific hardware in order to obtain a given piece of software, several legal cases in the United States found it illegal. Computing companies were therefore obliged to license the two products separately.

At the end of the 1970s, the first computers cheap enough to reach home users appeared. This fostered an application development industry independent of the companies that built and sold the hardware. Applications were distributed either as source code printed in magazines, which users had to type into their computers by hand, or on magnetic cassette tapes like those commonly used for music.

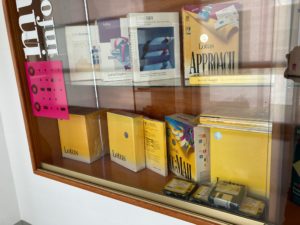

In the 1980s, several factors brought about profound changes: on the one hand, the emergence and commercial success of the IBM Personal Computer (with the MS-DOS operating system) and, on the other, the appearance of cheaper IBM PC clones, which could run the same operating system and the same applications. Overall, this caused an explosion in the number of computers and a growing demand for many types of applications: for example, WordPerfect for word processing, spreadsheets such as Lotus 1-2-3, databases like dBase, and CorelDraw for graphic design.

The ease of copying, together with new and inexpensive storage media (mainly floppy disks), enabled unauthorized copying of software and its rapid spread. The proliferation of books and manuals about applications, independent of the official documentation, also dates from that time.

The 1980s also laid the groundwork for Free Software. The GNU project, launched in 1983 by Richard Stallman, aimed to create a UNIX-compatible operating system whose source code would be public and free. Today Free Software is not restricted to the Linux operating system; it also includes applications such as the Firefox web browser and the LibreOffice office suite.

The spread of the Internet in the 1990s changed the landscape worldwide. It enabled the most efficient and inexpensive distribution of digital data in history, and it sealed the fate of the CD-ROM as a storage medium. Increasing network bandwidth and the arrival of devices such as tablets and smartphones at the start of the 21st century have led to software, even for traditional computers, being distributed and updated through app stores tied to a specific operating system.